The Kinect for XBox One Workshop

Additional Topics to Explore

Saving Body Data to a Text File

The skeleton and face data for each tracked body are available at several points in the application code, in particular when a Body is about to be drawn.

Matlab and other applications often use *.csv files as their input. A *.csv file is simply a text file in which the data items on each line are separated by commas, so it can be written from C# with ordinary text output calls.

Here is an example of a function that writes one record for a Body to a *.csv file:

void writeOneBodyOutput(TimeSpan ts, Body outputBody)

{

// Reference: https://msdn.microsoft.com/en-us/library/windowspreview.kinect.body.aspx

// The Body Class has a number of members, some of which are:

//

// Various hand data items:

// HandLeftConfidence, of type enum TrackingConfidence (High=1, fully tracked/Low=0, not tracked)

// HandLeftState, of type enum HandState( Unknown=0, NotTracked=1, Open=2, Closed=3, Lasso=4)

// HandRightConfidence, of type enum TrackingConfidence (High=1, fully tracked/Low=0, not tracked)

// HandRightState, of type enum HandState( Unknown=0, NotTracked=1, Open=2, Closed=3, Lasso=4)

// IsRestricted, a boolean value

// IsTracked, a boolean value

// JointOrientations, the joint orientations of the body, stored in a read-only dictionary indexed by JointType.

// Each dictionary entry contains JointType and Orientation. Orientation is a Vector4 value.

// Joints, the Joint positions of the body, a read-only dictionary indexed by JointType.

// Each dictionary entry contains JointType and Joint. Joint contains JointType, Position (in Camera space),

// and TrackingState.

// Lean, the lean vector of the body

// LeanTrackingState, the tracking state for body lean

// TrackingId, the assigned Tracking ID for the body

// In this function we will write one line of comma-separated data containing some/all of the body data.

// The TimeSpan is passed in as an input parameter; it was obtained from the BodyFrame data

outFile.Write(ts.TotalMilliseconds + ",");

// IsTracked

outFile.Write(outputBody.IsTracked + ",");

// HandLeftConfidence & State

outFile.Write(outputBody.HandLeftConfidence + ",");

outFile.Write(outputBody.HandLeftState + ",");

// HandRightConfidence & State

outFile.Write(outputBody.HandRightConfidence + ",");

outFile.Write(outputBody.HandRightState + ",");

// IsRestricted

outFile.Write(outputBody.IsRestricted + ",");

// JointOrientations

for (int k = 1; k < jointNames.Length; k++) // Use indices 1..24

{

JointType key = getJointTypeValue(k);

JointOrientation value;

if (outputBody.JointOrientations.TryGetValue(key, out value))

{

//outFile.Write(value.JointType + ","); // <-- One of the fields in JointOrientation

outFile.Write(value.Orientation.X + ",");

outFile.Write(value.Orientation.Y + ",");

outFile.Write(value.Orientation.Z + ",");

outFile.Write(value.Orientation.W + ",");

}

else

{

outFile.Write(",,,,");

}

}

// Joints

for (int k = 1; k < jointNames.Length; k++) // Use indices 1..24

{

JointType key = getJointTypeValue(k);

Joint joint = outputBody.Joints[key];

outFile.Write(joint.TrackingState.ToString() + ",");

outFile.Write(joint.Position.X + ",");

outFile.Write(joint.Position.Y + ",");

outFile.Write(joint.Position.Z + ",");

}

// Lean Tracked

outFile.Write(outputBody.LeanTrackingState + ",");

// Lean

PointF leanVector = outputBody.Lean;

outFile.Write(leanVector.X + ",");

outFile.Write(leanVector.Y + ",");

}
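The function above builds each field with `value + ","`, which formats floating-point numbers using the current culture. On a computer whose regional settings use a comma as the decimal separator, that would add extra commas to each record. The following is a minimal sketch of one way to avoid this. The `CsvFormat` class and its `Join` method are hypothetical names and are not part of the workshop code.

```csharp
using System;
using System.Globalization;
using System.Linq;

// Hypothetical helper (not part of the workshop code): joins values into one
// CSV record using the invariant culture, so a float such as 0.25 is always
// written as "0.25" and never as "0,25".
public static class CsvFormat
{
    public static string Join(params object[] fields)
    {
        return string.Join(",", fields.Select(FormatField));
    }

    private static string FormatField(object field)
    {
        if (field == null) return "";   // empty cell for missing data

        // Numbers and enums implement IFormattable; bools and strings do not.
        IFormattable formattable = field as IFormattable;
        string text = formattable != null
            ? formattable.ToString(null, CultureInfo.InvariantCulture)
            : field.ToString();

        // Quote any field that itself contains a comma, quote, or line break.
        if (text.IndexOfAny(new[] { ',', '"', '\n', '\r' }) >= 0)
            text = "\"" + text.Replace("\"", "\"\"") + "\"";
        return text;
    }
}
```

With such a helper, a group of fields could be written in one call, for example `outFile.Write(CsvFormat.Join(leanVector.X, leanVector.Y) + ",")`.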

Matlab and other applications often require that a *.csv file contain column labels. Here is an example of a function that writes the column labels for the data records written by the previous function:

void writeOutputLineLabels()

{

// Reference: https://msdn.microsoft.com/en-us/library/windowspreview.kinect.body.aspx

// The Body Class has a number of members, some of which are:

//

// Various hand data items,

// IsTracked, a boolean value

// JointOrientations, the joint orientations of the body

// Joints, the Joint positions of the body

// Lean, the lean vector of the body

// LeanTrackingState, the tracking state for body lean

// TrackingId, the assigned Tracking ID for the body

// In this function we will write one line of comma-separated data containing some/all of the body data LABELS.

// The first label is for the TimeSpan value that writeOneBodyOutput writes at the start of each record

outFile.Write("TimeSpan,");

// IsTracked

outFile.Write("IsTracked,");

// HandConfidence & State

outFile.Write("HandLeftConfidence,HandLeftState,HandRightConfidence,HandRightState,");

// IsRestricted

outFile.Write("IsRestricted,");

// Joint Orientations

for (int k = 1; k < jointNames.Length; k++)

{

outFile.Write(jointNames[k] + "OrientationX,");

outFile.Write(jointNames[k] + "OrientationY,");

outFile.Write(jointNames[k] + "OrientationZ,");

outFile.Write(jointNames[k] + "OrientationW,");

}

// Joint Positions

for (int k = 1; k < jointNames.Length; k++)

{

outFile.Write(jointNames[k] + "TrackingState,");

outFile.Write(jointNames[k] + "PositionX,");

outFile.Write(jointNames[k] + "PositionY,");

outFile.Write(jointNames[k] + "PositionZ,");

}

// Lean Tracking State

outFile.Write("LeanTrackingState,");

// Lean Vector

outFile.Write("LeanVectorX,");

outFile.Write("LeanVectorY,");

// Face data

for (int k = 0; k < facePropertyNames.Length; k++)

outFile.Write(facePropertyNames[k] + ","); // <-- Comma always needed: the FaceYaw label follows

outFile.Write("FaceYaw,");

outFile.Write("FacePitch,");

outFile.Write("FaceRoll");

// End the line

outFile.WriteLine();

}

Often it is best to write all the Body data so that an application using that data can select whatever data items are needed. In other cases it may only be necessary to write a small subset of the available body data. A Dialog that allowed a Kinect application user to select all or some of the available data items to be recorded would be a helpful addition.
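One way to support such a selection, and to keep the label line and the data lines in step, is to describe each column once and build both lines from the same list. The sketch below is only an illustration. `BodySample`, `CsvColumn` and `BodyCsv` are hypothetical names, and `BodySample` stands in for the few Body members used so that the code runs without the Kinect SDK.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical stand-in for the few Body members used in this sketch.
public class BodySample
{
    public bool IsTracked;
    public float LeanX;
    public float LeanY;
}

// One CSV column: its label and how to read its value from a sample.
public class CsvColumn
{
    public string Label;
    public Func<BodySample, string> Value;
    public bool Selected = true;   // a dialog could toggle this per column
}

public static class BodyCsv
{
    private static string F(float v)
    {
        return v.ToString(CultureInfo.InvariantCulture);
    }

    public static List<CsvColumn> DefaultColumns()
    {
        return new List<CsvColumn>
        {
            new CsvColumn { Label = "IsTracked",   Value = b => b.IsTracked.ToString() },
            new CsvColumn { Label = "LeanVectorX", Value = b => F(b.LeanX) },
            new CsvColumn { Label = "LeanVectorY", Value = b => F(b.LeanY) },
        };
    }

    // The label line and the data lines are built from the same selected
    // columns, so they cannot drift out of step.
    public static string Header(IEnumerable<CsvColumn> columns)
    {
        return string.Join(",", columns.Where(c => c.Selected).Select(c => c.Label));
    }

    public static string Row(IEnumerable<CsvColumn> columns, BodySample body)
    {
        return string.Join(",", columns.Where(c => c.Selected).Select(c => c.Value(body)));
    }
}
```

A selection dialog would then only need to set the `Selected` flag of each column before recording starts.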

C# Network Programming

Because only a single Kinect for XBox One sensor may be connected to any computer (read discussion here), it may be necessary to collect multi-sensor data in a single location (a server, or a computer with a sensor that is designated as a server).

Communication Through Sockets is a Microsoft sample application that will show you the simplest way to transfer messages through Sockets between many connected users on the same network.

A sample C# program that demonstrates using a TcpListener to create a multi-threaded server, and a sample C# program that demonstrates using a TcpClient to create a suitable client, are available. The code in those programs can be used to develop a Kinect application that, for example, sends multi-sensor skeleton data to a central location.

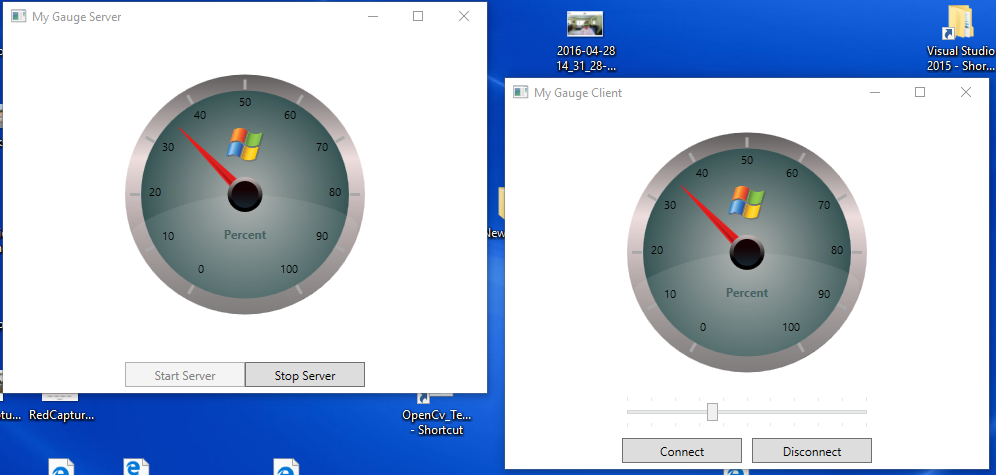

A second C# program pair, which demonstrates a "closed-loop" system formed by a server and a client that each contain a Gauge control, is also available. The code in those programs can be used to develop a Kinect client application that sends skeleton data to a server where, for example, the head yaw, pitch and roll angles are displayed using three Gauge controls. The Visual Studio Project files may be downloaded here.

Here is a screen shot of the client/server gauge programs in action:

After starting the GaugeServer program, clicking the Start Server button allows the server to listen for an incoming connection. Then after starting the GaugeClient program and clicking the Connect button, a connection between the client and the server is established.

Sliding the client control to a new value causes that value to be transmitted to the server, where it is displayed on the server's gauge. The server then transmits the received value back to the client, where the returned value is displayed on the client's gauge. This demonstrates the closed-loop nature of the client/server system.
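The round trip described above can be sketched with TcpListener and TcpClient alone. This is not the code of the Gauge programs. It is a minimal illustration that assumes one text line per value and runs the server and the client in the same process on the loopback interface. `EchoDemo` and `RoundTrip` are hypothetical names.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Threading.Tasks;

public static class EchoDemo
{
    // Starts a one-connection echo server on the loopback interface, sends
    // one line from a client, and returns the line echoed back by the server.
    public static string RoundTrip(string message)
    {
        // Port 0 asks the operating system for any free port.
        TcpListener listener = new TcpListener(IPAddress.Loopback, 0);
        listener.Start();
        int port = ((IPEndPoint)listener.LocalEndpoint).Port;

        // Server side: accept one client and echo back each line it sends.
        Task server = Task.Run(() =>
        {
            using (TcpClient peer = listener.AcceptTcpClient())
            using (NetworkStream stream = peer.GetStream())
            using (StreamReader reader = new StreamReader(stream))
            using (StreamWriter writer = new StreamWriter(stream) { AutoFlush = true })
            {
                string line;
                while ((line = reader.ReadLine()) != null)
                    writer.WriteLine(line);
            }
        });

        string echoed;
        // Client side: send the value, then read what the server returns.
        using (TcpClient client = new TcpClient())
        {
            client.Connect(IPAddress.Loopback, port);
            using (NetworkStream stream = client.GetStream())
            using (StreamReader reader = new StreamReader(stream))
            using (StreamWriter writer = new StreamWriter(stream) { AutoFlush = true })
            {
                writer.WriteLine(message);
                echoed = reader.ReadLine();
            }
        }

        server.Wait();     // the server loop ends once the client disconnects
        listener.Stop();
        return echoed;
    }
}
```

In a real application the server and client would run on different computers, and a server accepting several sensors would handle each accepted client on its own thread or task.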

OpenCV

OpenCV (Open Source Computer Vision Library) is an open source computer vision and machine learning software library. OpenCV was built to provide a common infrastructure for computer vision applications and to accelerate the use of machine perception in commercial products. Being a BSD-licensed product, OpenCV makes it easy for businesses to utilize and modify the code.

It has C++, C, Python, Java and MATLAB interfaces and supports Windows, Linux, Android and Mac OS. OpenCV leans mostly towards real-time vision applications and takes advantage of MMX and SSE instructions when available. Full-featured CUDA and OpenCL interfaces are being actively developed. There are over 500 algorithms and about 10 times as many functions that compose or support those algorithms. OpenCV is written natively in C++ and has a templated interface that works seamlessly with STL containers.

I've downloaded and installed OpenCV on my laptop and I've started to study the OpenCV Tutorial.

OpenCV programs may be written using C++, so Visual Studio Community Edition 2015 may be used to create them.

OpenCV may also be useful because the alternative programming language for Kinect is C++ and SDK 2.0 C++ samples are available.

Emgu CV

Emgu CV is a cross-platform .NET wrapper to the OpenCV image processing library that allows OpenCV functions to be called from .NET compatible languages such as C#, VB, VC++, IronPython etc. The wrapper can be compiled by Visual Studio, Xamarin Studio and Unity, and it can run on Windows, Linux, Mac OS X, iOS, Android and Windows Phone.

Instructions for downloading and installing Emgu CV in a Windows/Visual Studio .NET environment are available.

Samples are also provided in a Code Gallery and one sample demonstrates capture and display of a Webcam image stream.

I've downloaded and installed Emgu CV on my laptop and built the sample EmguCV programs using Visual Studio Community Edition 2015.

I've also created a few samples of my own to try face tracking. They work, but at this stage the processing time is too slow for any real-time application.

Saving a Bitmap Image stream as a Windows *.wmv or *.avi Video File

I need to learn how this is done. The typical webcam application on any computer always has this feature.

Saving a single Bitmap image as a "snapshot" *.jpg (or other image file type) is easily done.

One possibility is AForge.NET.

AForge.NET is an open source C# framework designed for developers and researchers in the fields of Computer Vision and Artificial Intelligence - image processing, neural networks, genetic algorithms, fuzzy logic, machine learning, robotics, etc.

The framework comprises a set of libraries and sample applications that demonstrate their features:

- AForge.Imaging - library with image processing routines and filters;

- AForge.Vision - computer vision library;

- AForge.Video - set of libraries for video processing;

- AForge.Neuro - neural networks computation library;

- AForge.Genetic - evolution programming library;

- AForge.Fuzzy - fuzzy computations library;

- AForge.Robotics - library providing support of some robotics kits;

- AForge.MachineLearning - machine learning library;

- etc.

I need to download and test this framework as it seems to provide an easy way to record Kinect images.

In my current Kinect C# WPF applications, the Face and Skeleton images are displayed to the user after being drawn on a Canvas control having a transparent background. Those canvases are placed over the Color camera image in the application's GUI.

Here is a sample using the VideoFileWriter class to write a sequence of 1000 bitmap images, each 320 x 240 pixels, to the file named test.avi:

// Requires: using System.Drawing; using System.Drawing.Imaging; using AForge.Video.FFMPEG;

int width = 320;

int height = 240;

// create instance of video writer

VideoFileWriter writer = new VideoFileWriter( );

// create new video file

writer.Open( "test.avi", width, height, 25, VideoCodec.MPEG4 );

// create a bitmap to save into the video file

Bitmap image = new Bitmap( width, height, PixelFormat.Format24bppRgb );

// write 1000 video frames

for ( int i = 0; i < 1000; i++ )

{

image.SetPixel( i % width, i % height, Color.Red );

writer.WriteVideoFrame( image );

}

writer.Close( );

Polling vs. Event Handling

The alternative to handling frame arrival events is to use Kinect's capability for polling to get the most recent frame data as required.
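The general shape of a polling loop can be sketched without the Kinect SDK. In the sketch below `Poller` and `RunAsync` are hypothetical names. The `getLatest` delegate stands in for a call such as AcquireLatestFrame(), which returns null when no new frame is available. The loop stops when the cancellation token is signalled.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class Poller
{
    // Calls getLatest every 'interval' until the token is cancelled, passing
    // each non-null result to onSample. Returns the number of polls performed.
    public static async Task<int> RunAsync<T>(
        Func<T> getLatest, Action<T> onSample,
        TimeSpan interval, CancellationToken token) where T : class
    {
        int polls = 0;
        while (!token.IsCancellationRequested)
        {
            T sample = getLatest();   // e.g. a wrapper around AcquireLatestFrame()
            polls++;
            if (sample != null)
                onSample(sample);     // null means no new data since the last poll

            try
            {
                await Task.Delay(interval, token);
            }
            catch (OperationCanceledException)
            {
                break;                // cancelled while waiting for the next poll
            }
        }
        return polls;
    }
}
```

When a method like this is awaited from the UI thread of a WPF application, the code after each await normally resumes on that thread, so `onSample` could update controls directly. That behaviour depends on the synchronization context and should be verified in the actual application.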

Here is a sample program that uses a polling technique to display the color camera image and current time every 100 milliseconds. The completed Visual Studio solution file is here: WPFColorPolling.zip.

Here is the content of MainWindow.xaml:

<Window x:Class="WPFColorPolling.MainWindow"

xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"

xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"

xmlns:d="http://schemas.microsoft.com/expression/blend/2008"

xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"

xmlns:local="clr-namespace:WPFColorPolling"

mc:Ignorable="d"

Title="WPF Color Polling" Height="350" Width="525" Loaded="Window_Loaded" Closing="Window_Closing">

<Grid>

<Grid.RowDefinitions>

<RowDefinition Height="9*"/>

<RowDefinition Height="1*"/>

</Grid.RowDefinitions>

<Image Grid.Row="0" Name="camera"/>

<TextBlock Grid.Row="1" Name="textBlock1" Height="20" HorizontalAlignment="Center" VerticalAlignment="Center"/>

</Grid>

</Window>

Here is the content of MainWindow.xaml.cs:

using System;

using System.ComponentModel;

using System.Threading;

using System.Windows;

using Microsoft.Kinect;

using System.Windows.Media;

using System.Windows.Media.Imaging;

namespace WPFColorPolling

{

/// <summary>

/// Interaction logic for MainWindow.xaml

/// </summary>

public partial class MainWindow : Window

{

BackgroundWorker worker = null;

KinectSensor sensor = null;

ColorFrameSource colorFrameSource = null;

ColorFrameReader colorFrameReader = null;

ColorFrame colorFrame = null;

public MainWindow()

{

InitializeComponent();

}

private void Window_Loaded(object sender, RoutedEventArgs e)

{

sensor = KinectSensor.GetDefault();

colorFrameSource = sensor.ColorFrameSource;

colorFrameReader = colorFrameSource.OpenReader();

sensor.Open();

// Create a Thread or use a BackgroundWorker

worker = new BackgroundWorker();

worker.DoWork += worker_DoWork;

worker.RunWorkerCompleted += worker_RunWorkerCompleted;

worker.ProgressChanged += worker_ProgressChanged;

worker.WorkerReportsProgress = true;

worker.WorkerSupportsCancellation = true;

textBlock1.Text = "";

worker.RunWorkerAsync();

}

private void worker_DoWork(object sender, DoWorkEventArgs e)

{

while (!worker.CancellationPending) // exit once Window_Closing calls CancelAsync()

{

// run all background tasks here

Thread.Sleep(100); // 0.1 seconds

colorFrame = colorFrameReader.AcquireLatestFrame();

worker.ReportProgress(0); // Can pass int percent and object userState

}

}

private void worker_RunWorkerCompleted(object sender, RunWorkerCompletedEventArgs e)

{

// Called once after worker_DoWork returns; show the final frame, if one is pending

if (colorFrame != null)

{

camera.Source = ToBitmap(colorFrame);

colorFrame.Dispose(); // <-- IMPORTANT - This was needed to make this all work

displayTime();

}

}

private void worker_ProgressChanged(object sender, ProgressChangedEventArgs e)

{

// Update ui as the worker makes progress on his work

if (colorFrame != null)

{

camera.Source = ToBitmap(colorFrame);

colorFrame.Dispose(); // <-- IMPORTANT - This was needed to make this all work

displayTime();

}

}

private void displayTime()

{

// Read the clock once so all fields come from the same instant, and zero-pad them

DateTime now = DateTime.Now; // DateTime.Now is already local time

textBlock1.Text = now.ToString("HH:mm:ss.fff");

}

private void Window_Closing(object sender, System.ComponentModel.CancelEventArgs e)

{

worker.CancelAsync();

worker.Dispose();

sensor.Close();

}

public ImageSource ToBitmap(ColorFrame frame)

{

int width = frame.FrameDescription.Width;

int height = frame.FrameDescription.Height;

PixelFormat format = PixelFormats.Bgr32;

byte[] pixels = new byte[width * height * ((format.BitsPerPixel + 7) / 8)];

if (frame.RawColorImageFormat == ColorImageFormat.Bgra)

{

frame.CopyRawFrameDataToArray(pixels);

}

else

{

frame.CopyConvertedFrameDataToArray(pixels, ColorImageFormat.Bgra);

}

int stride = width * format.BitsPerPixel / 8;

return BitmapSource.Create(width, height, 96, 96, format, null, pixels, stride);

}

}

}
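The buffer size and stride computed in ToBitmap above can be checked with a short calculation. The sketch below uses hypothetical names (`FrameBuffer`, `Stride`, `Size`) and assumes rows are stored without padding, as they are in the BitmapSource.Create call above.

```csharp
using System;

public static class FrameBuffer
{
    // Bytes per image row, rounding the pixel size up to whole bytes
    // (no row padding assumed).
    public static int Stride(int width, int bitsPerPixel)
    {
        return width * ((bitsPerPixel + 7) / 8);
    }

    // Total bytes needed to hold one frame.
    public static int Size(int width, int height, int bitsPerPixel)
    {
        return Stride(width, bitsPerPixel) * height;
    }
}
```

For the sensor's 1920 x 1080 color frame in the 32-bit Bgr32 format, `Stride(1920, 32)` is 7680 bytes and `Size(1920, 1080, 32)` is 8,294,400 bytes, which is the size of the array allocated for every frame displayed.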

Here is a screen shot of the program's output:

Matlab Kinect Inter-Operability

Matlab 2016 now has the ability to read data from a Kinect for XBox One sensor. A Matlab polling technique is used.

Here is the Matlab reference.

Here is a Matlab example: Preview color and depth streams from the Kinect for Windows v2 simultaneously.

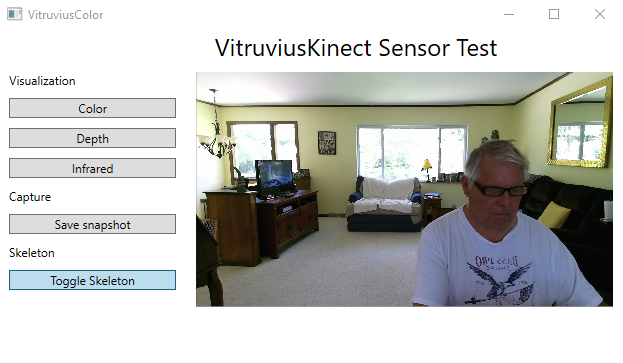

Vitruvius Kinect

VitruviusKinect is a framework that simplifies Kinect/WPF programming. It provides a

A free download is available at GitHub.

Here is the code for a sample program that uses VitruviusKinect. The completed Visual Studio solution file is here: VitruviusColor.zip.

This is the content of MainWindow.xaml:

<Window x:Class="VitruviusColor.MainWindow"

xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"

xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"

xmlns:d="http://schemas.microsoft.com/expression/blend/2008"

xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"

xmlns:local="clr-namespace:VitruviusColor"

xmlns:controls="clr-namespace:LightBuzz.Vitruvius.Controls;assembly=LightBuzz.Vitruvius"

mc:Ignorable="d"

Title="VitruviusColor" Height="480" Width="640" Loaded="Window_Loaded" Unloaded="Window_Unloaded">

<Grid>

<Grid.RowDefinitions>

<RowDefinition Height="auto"/>

<RowDefinition Height="auto"/>

</Grid.RowDefinitions>

<!-- Back button and page title -->

<Grid Grid.Row="0">

<Grid.ColumnDefinitions>

<ColumnDefinition Width="90"/>

<ColumnDefinition Width="*"/>

</Grid.ColumnDefinitions>

<TextBlock x:Name="pageTitle" Text="VitruviusKinect Sensor Test" Grid.Column="1"

IsHitTestVisible="false" TextWrapping="NoWrap" HorizontalAlignment="Center"

VerticalAlignment="Center" FontSize="24" />

</Grid>

<Grid Grid.Row="1">

<Grid.ColumnDefinitions>

<ColumnDefinition Width="3*" />

<ColumnDefinition Width="7*" />

</Grid.ColumnDefinitions>

<StackPanel Grid.Column="0" Margin="10,0">

<TextBlock Text="Visualization" Margin="0,10" />

<Button Content="Color" HorizontalAlignment="Stretch" Click="Color_Click" />

<Button Content="Depth" Margin="0,10" HorizontalAlignment="Stretch" Click="Depth_Click" />

<Button Content="Infrared" HorizontalAlignment="Stretch" Click="Infrared_Click" />

<TextBlock Text="Capture" Margin="0,10" />

<Button Content="Save snapshot" HorizontalAlignment="Stretch" Click="Save_Click" />

<TextBlock Text="Skeleton" Margin="0,10" />

<ToggleButton Content="Toggle Skeleton" HorizontalAlignment="Stretch"

Checked="Skeleton_Checked" Unchecked="Skeleton_Unchecked" />

</StackPanel>

<controls:KinectViewer Grid.Column="1" x:Name="viewer" Margin="10"/>

</Grid>

</Grid>

</Window>

This is the content of MainWindow.xaml.cs:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Data;

using System.Windows.Documents;

using System.Windows.Input;

using System.Windows.Media;

using System.Windows.Media.Imaging;

using System.Windows.Navigation;

using System.Windows.Shapes;

using LightBuzz.Vitruvius.Controls;

using LightBuzz.Vitruvius.Gestures;

using LightBuzz.Vitruvius;

using Microsoft.Kinect;

namespace VitruviusColor

{

/// <summary>

/// Interaction logic for MainWindow.xaml

/// </summary>

public partial class MainWindow : Window

{

KinectSensor _sensor;

MultiSourceFrameReader _reader;

PlayersController _playersController;

bool _displaySkeleton;

public MainWindow()

{

InitializeComponent();

_sensor = KinectSensor.GetDefault();

if (_sensor != null)

{

_sensor.Open();

_reader = _sensor.OpenMultiSourceFrameReader(FrameSourceTypes.Color | FrameSourceTypes.Depth | FrameSourceTypes.Infrared | FrameSourceTypes.Body);

_reader.MultiSourceFrameArrived += Reader_MultiSourceFrameArrived;

_playersController = new PlayersController();

_playersController.BodyEntered += UserReporter_BodyEntered;

_playersController.BodyLeft += UserReporter_BodyLeft;

_playersController.Start();

}

}

private void Window_Unloaded(object sender, RoutedEventArgs e)

{

if (_playersController != null)

{

_playersController.Stop();

}

if (_reader != null)

{

_reader.Dispose();

}

if (_sensor != null)

{

_sensor.Close();

}

}

private void Color_Click(object sender, RoutedEventArgs e)

{

viewer.Visualization = Visualization.Color;

}

private void Depth_Click(object sender, RoutedEventArgs e)

{

viewer.Visualization = Visualization.Depth;

}

private void Infrared_Click(object sender, RoutedEventArgs e)

{

viewer.Visualization = Visualization.Infrared;

}

private void Skeleton_Checked(object sender, RoutedEventArgs e)

{

_displaySkeleton = true;

}

private void Skeleton_Unchecked(object sender, RoutedEventArgs e)

{

_displaySkeleton = false;

viewer.Clear(); //calling this after setting _displaySkeleton=false to clear any previously drawn skeleton

}

void Reader_MultiSourceFrameArrived(object sender, MultiSourceFrameArrivedEventArgs e)

{

var reference = e.FrameReference.AcquireFrame();

if (reference == null) return; // the frame may have expired before it could be acquired

// Color

using (var frame = reference.ColorFrameReference.AcquireFrame())

{

if (frame != null)

{

if (viewer.Visualization == Visualization.Color)

{

viewer.Image = frame.ToBitmap();

}

}

}

// Depth

using (var frame = reference.DepthFrameReference.AcquireFrame())

{

if (frame != null)

{

if (viewer.Visualization == Visualization.Depth)

{

viewer.Image = frame.ToBitmap();

}

}

}

// Infrared

using (var frame = reference.InfraredFrameReference.AcquireFrame())

{

if (frame != null)

{

if (viewer.Visualization == Visualization.Infrared)

{

viewer.Image = frame.ToBitmap();

}

}

}

// Body

using (var frame = reference.BodyFrameReference.AcquireFrame())

{

if (frame != null)

{

var bodies = frame.Bodies();

_playersController.Update(bodies);

foreach (Body body in bodies)

{

if (_displaySkeleton)

{

viewer.DrawBody(body);

}

}

}

}

}

void UserReporter_BodyEntered(object sender, UsersControllerEventArgs e)

{

// A new user has entered the scene.

}

void UserReporter_BodyLeft(object sender, UsersControllerEventArgs e)

{

// A user has left the scene.

viewer.Clear();

}

private void Save_Click(object sender, RoutedEventArgs e)

{

string path = System.IO.Path.Combine(Environment.GetFolderPath(Environment.SpecialFolder.MyPictures), "vitruvius-capture.jpg");

(viewer.Image as WriteableBitmap).Save(path);

}

}

}

Note the use of the KinectViewer control to display the selected image and skeleton, and the simple code to save a snapshot of the displayed image.

VitruviusKinect handles much of the detailed work required to display the Kinect streams and to track "players" as they enter and leave the scene.

Drawing to WPF Canvas and Image Controls

The Kinect sample programs offered by Microsoft and those presented in this workshop use various techniques to display the color, depth and infrared streams, skeletons, and face data.

The color, depth and infrared streams are visualized using WPF Image controls.

The skeletal and face data is visualized using a WPF Canvas control.

When presented with the task of drawing anything as part of a Kinect application, the developer must select an appropriate technique to use.

It would be helpful to have available several small examples that illustrate different techniques for accomplishing these tasks using Bitmaps, WriteableBitmaps, DrawingGroups, etc.

Here are a few links to get started:

Drawing with Shapes and Canvas

Shapes and Basic Drawing in WPF Overview - This topic gives an overview of how to draw with Shape objects. Available shape objects include Ellipse, Line, Path, Polygon, Polyline, and Rectangle. The Canvas panel is a particularly good choice for creating complex drawings because it supports absolute positioning of its child objects.

The Canvas Class - Defines an area within which you can explicitly position child elements by using coordinates that are relative to the Canvas area. A Canvas contains a collection of UIElement objects, which are in the Children property.

Create Shapes Dynamically in WPF - A Shape is a type of UIElement that enables you to draw a shape to the screen. It provides us with a large arsenal of vector graphic types such as Line, Ellipse, Path and others and because they are UI elements, Shape objects can be used inside Panel elements and most controls.

DynamicShapes.zip contains a simple WPF C# program that dynamically draws Rectangles, Lines, an Ellipse and some Text on a Canvas. The Canvas control is contained in a Viewbox so that the Canvas maintains its proportions as the window is resized.

Drawing using the Drawing Class

The DrawingGroup Class - The DrawingGroup class represents a collection of drawings that can be operated upon as a single drawing. Use a DrawingGroup to combine multiple drawings into a single, composite drawing. Unlike other Drawing objects, you can apply a Transform, BitmapEffect, Opacity setting, OpacityMask, ClipGeometry, or a GuidelineSet to a DrawingGroup. The flexibility of this class enables you to create complex scenes. Because DrawingGroup is also a Drawing, it can contain other DrawingGroup objects.

Drawing Objects Overview - (Sample Program) A Drawing object describes visible content, such as a shape, bitmap, video, or a line of text. Different types of drawings describe different types of content.

The Drawing Class - The Drawing class is an abstract class that describes a 2-D drawing. This class cannot be inherited by your code. Drawing objects are light-weight objects that enable you to add geometric shapes, images, text, and media to an application. There are different types of Drawing objects for different types of content: GeometryDrawing, ImageDrawing, DrawingGroup, VideoDrawing, and GlyphRunDrawing.

The GeometryDrawing Class - The GeometryDrawing class draws a Geometry using the specified Brush and Pen. Use the GeometryDrawing class with a DrawingBrush to paint an object with a shape, with a DrawingImage to create clip art, or with a DrawingVisual.

The Geometry Class - Classes that derive from this abstract base class define geometric shapes. Geometry objects can be used for clipping, hit-testing, and rendering 2-D graphic data.

Drawings - How-To Topics - Contains sample programs demonstrating how to use Drawing objects to draw shapes, images, or text.

Displaying and Drawing Images using the Image Class

The Image Class - The Image class represents a control that displays an image. The Image class enables you to load the following image types: .bmp, .gif, .ico, .jpg, .png, .wdp, and .tiff.

The Image.Source Property - The Image.Source property gets or sets the ImageSource for the image. This property allows the "content" of the displayed Image to be changed.

The ImageSource Class - The ImageSource class represents an object type that has a width, height, and ImageMetadata such as a BitmapSource and a DrawingImage. This is an abstract class.

The BitmapSource Class - Code examples on this page demonstrate A) How to create a BitmapSource and use it as the source of an Image control, and B) How to use a BitmapSource derived class, BitmapImage, to create a bitmap from an image file and use it as the source of an Image control.

Drawing Bitmaps - DrawingImage and DrawingVisual - WPF provides multiple ways to convert vector drawings to bitmaps. Find out how DrawingImage and DrawingVisual work and when to use which. On the way we look at how to create 2D vector drawings.

The WriteableBitmap Class - The WriteableBitmap class provides a BitmapSource that can be written to and updated. Use the WriteableBitmap class to update and render a bitmap on a per-frame basis. This is useful for generating algorithmic content, such as a fractal image, and for data visualization, such as a music visualizer. This page contains an example demonstrating how a WriteableBitmap can be used as the source of an Image to draw pixels when the mouse moves. Here you'll find sample code showing how to draw text on a WriteableBitmap.

Three-Dimensional Graphics

Some applications require the skeleton data to be drawn in 3D, with animation. What is the best C# technique for this task?

DirectX Graphics and Gaming - Microsoft DirectX graphics provides a set of APIs that you can use to create games and other high-performance multimedia apps. DirectX graphics includes support for high-performance 2-D and 3-D graphics.

Direct2D - Direct2D is a hardware-accelerated, immediate-mode, 2-D graphics API that provides high performance and high-quality rendering for 2-D geometry, bitmaps, and text. The Direct2D API is designed to interoperate well with GDI, GDI+, and Direct3D.

Direct3D - Direct3D enables you to create 3-D graphics for games and scientific apps. Direct3D is a low-level API that you can use to draw triangles, lines, or points per frame, or to start highly parallel operations on the GPU.

Drawing 3D Objects Using WPF 3D

Microsoft's WPF 3D Graphics Overview - The 3D functionality in Windows Presentation Foundation (WPF) enables developers to draw, transform, and animate 3D graphics in both markup and procedural code. Developers can combine 2D and 3D graphics to create rich controls, provide complex illustrations of data, or enhance the user experience of an application's interface. 3D support in WPF is not designed to provide a full-featured game-development platform. This topic provides an overview of 3D functionality in the WPF graphics system.

Introduction to WPF 3D - Demonstrates displaying a 3D cube in a Windows WPF application.

WPF 3D Primer - Develops a Windows application that displays, rotates and zooms a 3D solid.

Example of 3D Graphics in WPF - This article discusses how to create a 3D Triangle in WPF. Triangles are the basic building block of more complex 3D objects.

WPF Solid Wireframe Transform - This article contains a sample program that draws either a solid model or a wireframe model of a 3D cube. It uses 3dtool.dll available here.

WPF The Easy 3D Way - Contains a good discussion and code for a WPF 3D program that displays a rotating cube.
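Whichever rendering library is chosen, drawing a skeleton in 3D comes down to transforming each joint position and projecting it onto the screen. The sketch below illustrates that step with the System.Numerics types (available in recent .NET versions; on the .NET Framework they may need an extra assembly reference). `SkeletonProjection`, its parameter names and the pinhole camera model are assumptions made for illustration and do not come from any of the linked articles.

```csharp
using System;
using System.Numerics;

public static class SkeletonProjection
{
    // Rotates a camera-space point (in metres) about the vertical axis through
    // 'pivot', then applies a pinhole perspective projection to pixel coordinates.
    public static Vector2 Project(Vector3 point, Vector3 pivot, float yawRadians,
                                  float focalLengthPixels, float centerX, float centerY)
    {
        Matrix4x4 rotation = Matrix4x4.CreateRotationY(yawRadians);
        Vector3 rotated = Vector3.Transform(point - pivot, rotation) + pivot;

        // Perspective divide: points farther away (larger Z) move toward the centre.
        float x = centerX + focalLengthPixels * rotated.X / rotated.Z;
        float y = centerY - focalLengthPixels * rotated.Y / rotated.Z; // screen Y grows downward
        return new Vector2(x, y);
    }
}
```

Animating the view would then amount to changing `yawRadians` a little on each frame and redrawing the projected joints, for example as Ellipse and Line shapes on a Canvas.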